Deep Learning has provided a major boost to computer vision’s already rapidly expanding reach. A lot of new applications of computer vision technologies have been implemented with Deep Learning and are now becoming a part of our daily lives.

The shipping industry in particular has started to see the enormous benefits of this technology. As shipping and trading companies process tens of thousands of containers every day, Automatic Container ID and ISO Detection in real-time is the need of the hour.

The container identification system used is an ISO format composed of a series of letters and numbers. As the terminal gates and other checkpoints handle a large number of containers, there is always a possibility that the container identification sequence has not been properly followed. Human inspection and manual recording of the container ID and ISO are likely to cause errors. They hamper the speed of operation, particularly during the customs clearance verification process, in which customs officers and terminal operators have to deal with individual containers as they enter and leave terminals.

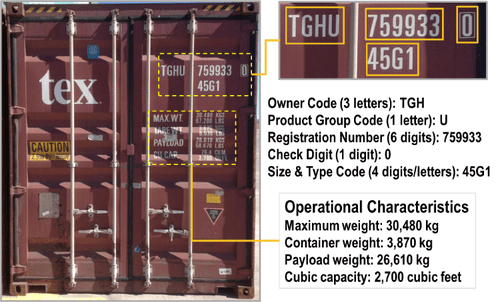

An overview of the Container Identification system:

Fig. 1. Image Credits: The Geography of Transport Systems by Jean-Paul Rodrigue

The container ID is an eleven-character code that comprises an owner code, a product group code, a license number, and a check digit. The ISO code is an international standard of four characters that represents the container category and size. Each of these markings plays a very important role in the transport of the container and provides valuable information to all organizations in the supply chain concerning the control and safety of the container.
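The check digit makes it possible to sanity-check a recognized ID before it is recorded. The post does not describe how the digit is computed, so the following is only a minimal sketch that assumes the IDs follow the ISO 6346 scheme (letters mapped to values from 10 to 38 with multiples of 11 skipped, each of the first ten characters weighted by a power of two). The function names are illustrative and not part of the original system.

```python
import string


def _letter_values():
    """Map A-Z to 10..38, skipping multiples of 11 (assumed ISO 6346 rule)."""
    values, v = {}, 10
    for ch in string.ascii_uppercase:
        if v % 11 == 0:
            v += 1
        values[ch] = v
        v += 1
    return values


LETTER_VALUES = _letter_values()


def check_digit(first_ten):
    """Compute the check digit from the first ten characters of an ID."""
    if len(first_ten) != 10:
        raise ValueError("expected exactly 10 characters")
    total = 0
    for position, ch in enumerate(first_ten.upper()):
        value = int(ch) if ch.isdigit() else LETTER_VALUES[ch]
        total += value * (2 ** position)
    # Remainder modulo 11; a remainder of 10 is written as 0.
    return total % 11 % 10


def is_valid_container_id(container_id):
    """Return True if the eleventh character matches the computed check digit."""
    cid = container_id.replace(" ", "").upper()
    if len(cid) != 11 or not cid[-1].isdigit():
        return False
    try:
        return check_digit(cid[:10]) == int(cid[-1])
    except (KeyError, ValueError):
        # Unexpected character somewhere in the first ten positions.
        return False


print(is_valid_container_id("CSQU3054383"))  # True
print(is_valid_container_id("CSQU3054384"))  # False
```

A check like this could be used to flag IDs where one character was misclassified, since most single-character errors change the expected check digit.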

Data Preparation:

Data collection is the process of gathering and measuring information on variables of interest. To train a model, we need a sufficient amount of relevant data. Labeling is an equally important part of training, because the results will reach maximum accuracy only if the data labels are correct. For our purpose, the dataset was generated by collecting several container videos and extracting the frames from these videos as images. The labeling of containers, text regions, and characters was done using labeling apps. A few examples of labeling apps can be found here: LabelImg and LabelMe

The key modules of the project were:

- Container Detection

- Text Detection

- Character Detection

- Character Classification

1. Container Detection:

Object detection is a computer vision technique that helps to detect the objects within an image or video. Due to its close relationship with video analysis and image understanding, it has attracted much research attention in recent years. Numerous deep learning-based object detection frameworks are available for object detection tasks. You can get an overview of object detection algorithms using deep learning here – An overview of deep learning based object detection algorithms.

Fig. 2. Container detection

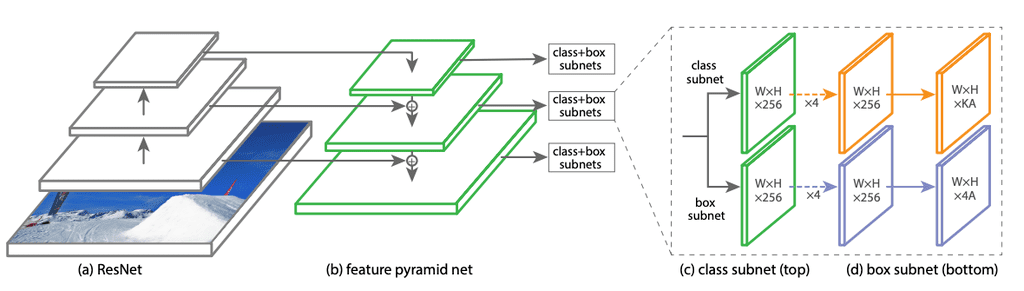

For container detection, a customized variant of the RetinaNet network is used. RetinaNet is a composite network that consists of a backbone network and two sub-networks. The backbone network generates the convolutional feature maps of the image. One sub-network produces the classification results from the backbone's output, and the other performs bounding-box regression on the same output. The pretrained weights used are from ResNet50. Here is a related post that will give a detailed explanation regarding the RetinaNet architecture.

Fig. 3. RetinaNet Architecture

For training and evaluating a RetinaNet model, two .csv files are required. The XML files generated while labeling containers are parsed to produce an annotation.csv file, which contains the input image location, its bounding box values, and the corresponding label. Each row has the form <path/to/image>, <xmin>, <ymin>, <xmax>, <ymax>, <label>. The classes.csv file contains every unique class label in the dataset along with its index value. These CSV files are the input to the model, and once training is completed, a trained weight file is saved. For making predictions, we convert this trained model into an inference model. While testing, it returns the bounding box values of containers along with their corresponding scores and labels. Low-confidence boxes can be filtered out by setting a score threshold. For visualizing the outputs, OpenCV components can be used.
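The conversion from labeling output to the two CSV files can be sketched as follows. This is an illustrative example rather than the project's actual script: it assumes the XML files use the Pascal VOC layout that LabelImg writes (object, name, and bndbox elements), and the sample XML, image path, and function names are made up for the demonstration.

```python
import csv
import io
import xml.etree.ElementTree as ET


def voc_to_rows(xml_text, image_path):
    """Return one [path, xmin, ymin, xmax, ymax, label] row per labeled object."""
    root = ET.fromstring(xml_text)
    rows = []
    for obj in root.iter("object"):
        box = obj.find("bndbox")
        coords = [int(float(box.findtext(tag)))
                  for tag in ("xmin", "ymin", "xmax", "ymax")]
        rows.append([image_path, *coords, obj.findtext("name")])
    return rows


def build_csvs(rows):
    """Return (annotation_csv, classes_csv) as strings."""
    labels = sorted({row[-1] for row in rows})  # unique class labels
    annotations, classes = io.StringIO(), io.StringIO()
    csv.writer(annotations, lineterminator="\n").writerows(rows)
    csv.writer(classes, lineterminator="\n").writerows(
        (label, index) for index, label in enumerate(labels)
    )
    return annotations.getvalue(), classes.getvalue()


# Hypothetical annotation for a single frame.
SAMPLE_XML = """<annotation>
  <filename>frame_0001.jpg</filename>
  <object>
    <name>container</name>
    <bndbox><xmin>34</xmin><ymin>50</ymin><xmax>610</xmax><ymax>420</ymax></bndbox>
  </object>
</annotation>"""

rows = voc_to_rows(SAMPLE_XML, "images/frame_0001.jpg")
annotation_csv, classes_csv = build_csvs(rows)
print(annotation_csv, end="")  # images/frame_0001.jpg,34,50,610,420,container
print(classes_csv, end="")     # container,0
```

In practice the strings would be written to annotation.csv and classes.csv on disk, with one call to voc_to_rows per XML file.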

2. Text Detection:

Once the containers are detected, the text regions corresponding to the ID and ISO need to be detected. Because of variations in the size, location, lighting, and texture of objects in the image, text detection from images has become one of the most difficult tasks in computer vision. Among the available approaches, semantic segmentation performs well for text detection.

Semantic Segmentation:

Semantic segmentation is the task of understanding the semantic content in images. Semantic Segmentation has many applications, such as detecting road signs, detecting drivable areas in autonomous vehicles, etc.

Fig. 4. Text Detection using semantic segmentation

The semantic segmentation network follows an encoder-decoder architecture. Several pretrained models are also available. So the initial step is selecting the proper base network and segmentation network for semantic segmentation tasks. Along with choosing the required architecture for semantic segmentation, choosing the input dimension also has significance. If the input size is large it consumes more memory and training will be slower.

The mask images were generated from the annotated JSON files. The feature vectors generated by the encoder are passed to the decoder, and the resulting output is mapped back to the original image shape using NumPy operations. Several image processing techniques were also applied for mapping the results to the original image. The base repo is available here: image-segmentation-keras.
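Mapping a low-resolution predicted mask back to the original image size can be illustrated with a nearest-neighbour resize. The sketch below uses plain Python lists so that it runs without dependencies; the class ids (0 for background, 1 for a text region), the tiny sample mask, and the bounding-box step are assumptions for illustration, since the post does not detail the exact post-processing.

```python
def resize_mask_nearest(mask, out_height, out_width):
    """Scale a 2D class-id mask to (out_height, out_width) by nearest neighbour."""
    in_height, in_width = len(mask), len(mask[0])
    return [
        [mask[r * in_height // out_height][c * in_width // out_width]
         for c in range(out_width)]
        for r in range(out_height)
    ]


def mask_bounding_box(mask, class_id):
    """Return (xmin, ymin, xmax, ymax) around all pixels of class_id, or None."""
    rows = [r for r, row in enumerate(mask) if class_id in row]
    cols = [c for row in mask for c, value in enumerate(row) if value == class_id]
    if not rows:
        return None
    return min(cols), min(rows), max(cols), max(rows)


# Hypothetical 3x4 network output; 1 marks the predicted text region.
small_mask = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]

full_mask = resize_mask_nearest(small_mask, 6, 8)  # pretend the image is 6x8
box = mask_bounding_box(full_mask, 1)
print(box)  # (2, 2, 5, 3)
```

With NumPy, the same resize can be expressed by indexing the mask array with integer row and column index arrays, which is considerably faster on real image sizes.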

3. Character Detection:

For character detection, a custom RetinaNet network was utilized. The input to the system is the annotation.csv file and classes.csv file. The annotation.csv file contains the bounding box annotations for each character and their corresponding image path. While testing, the input is the detected text crops and outputs can be visualized using OpenCV functions.
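As with container detection, the detector returns boxes with scores and labels, and low-scoring boxes can be dropped with a threshold. The sketch below shows that filtering step on made-up values. Ordering the surviving boxes from left to right is an additional assumption that only suits text laid out on a single horizontal line, and the function name and default threshold are illustrative.

```python
def filter_and_order(boxes, scores, labels, threshold=0.5):
    """Keep detections scoring at least threshold, sorted by xmin (left to right)."""
    kept = [
        (box, score, label)
        for box, score, label in zip(boxes, scores, labels)
        if score >= threshold
    ]
    return sorted(kept, key=lambda detection: detection[0][0])


# Hypothetical detector output: (xmin, ymin, xmax, ymax) boxes with scores.
boxes = [(120, 10, 150, 60), (10, 12, 40, 58), (65, 11, 95, 59)]
scores = [0.91, 0.88, 0.32]
labels = ["char", "char", "char"]

for box, score, label in filter_and_order(boxes, scores, labels, threshold=0.5):
    print(box, score, label)
```

The kept boxes can then be used to crop individual characters from the text region before classification.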

Fig. 5. Character Detection

4. Character Classification:

For character classification, a custom CNN model was used. A convolutional neural network has several layers. An overview of convolutional neural networks can be found here (Convolutional Neural Networks) and here (Understanding of Convolutional Neural Networks). For compiling the model, optimizers such as Adam and RMSprop can be used. Metrics such as training loss, validation loss, and validation accuracy can be tracked to evaluate the model during training. The loss function can be selected depending on the problem statement.

The inputs to the CNN model are the character crops from the custom RetinaNet. These can be digit crops or alphabet crops, and the two types can be trained together or separately.

Fig. 6. Container ID and ISO detection and classification

Accuracy:

The success of the system can be defined as the ability of each module to detect or classify its targets correctly. For container detection, the custom RetinaNet network gives good results, with a minimum loss of 1.4. For text detection, the semantic segmentation detects the ID and ISO with an error of 1%. Character detection is done using the same RetinaNet model and the error was 0.5. The custom CNN model is lightweight compared to other classification networks like AlexNet and gives an accuracy of 99% for character classification.

Conclusion:

The Automatic Container Code Recognition system is designed to automatically detect and recognize the container ID and ISO, which helps reduce the drawbacks of recording them manually as containers enter the terminal gates.

The system will facilitate effective container management and operations at terminal gates, in the yard, and in the loading and unloading zones for cranes. As future work, the multiple RetinaNet models could be replaced with a single CRNN module to improve performance.

For further info, please visit www.ignitarium.com.

The post Automatic Container Code Recognition Using Deep Learning appeared first on NASSCOM Community |The Official Community of Indian IT Industry.
