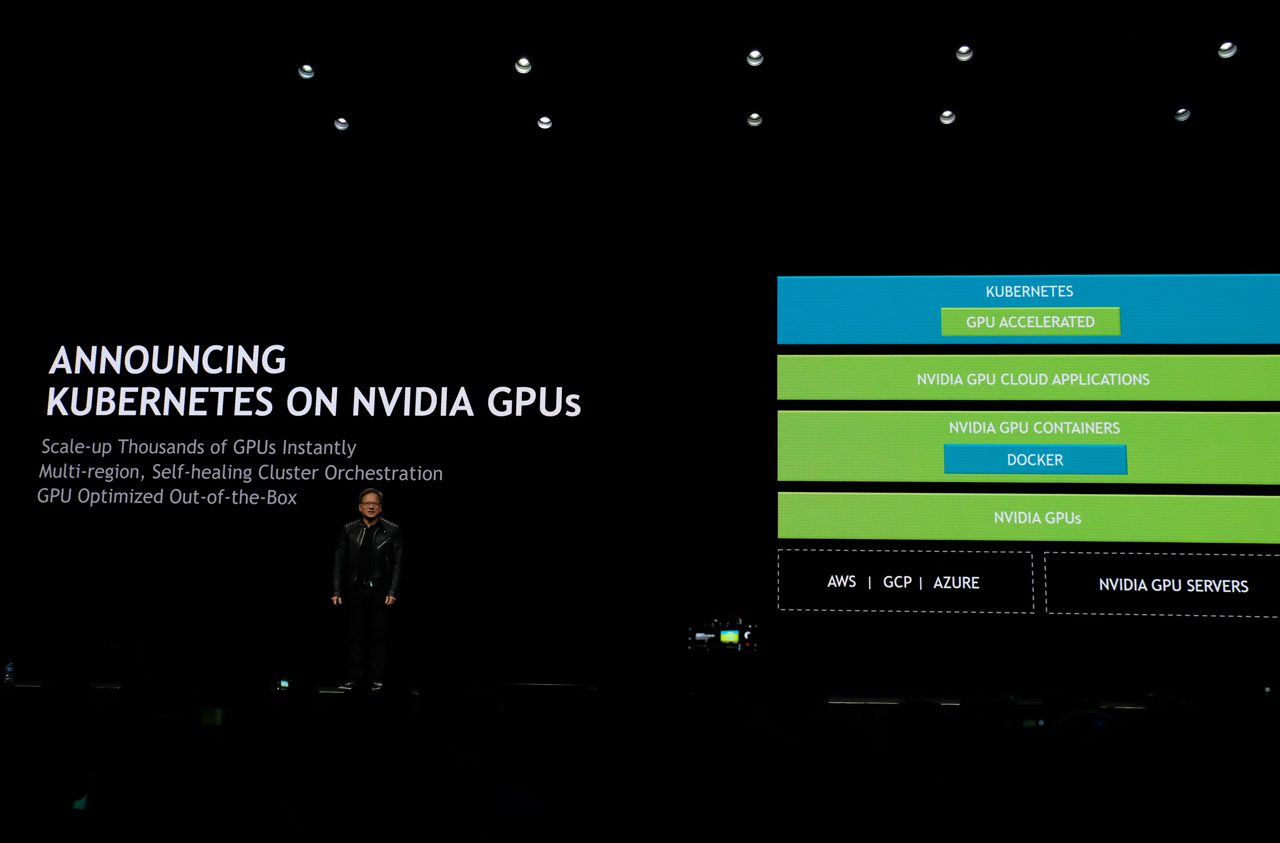

With graphics processing units (GPUs) gaining ground in the datacenter as accelerators for data-intensive workloads, Nvidia is pairing its GPUs with Kubernetes clusters. The company has made a release candidate of Kubernetes on Nvidia GPUs freely available to developers.

Nvidia, the world's largest GPU maker, aims to help enterprises seamlessly train deep learning models on multi-cloud GPU clusters.

Announced at the Computer Vision and Pattern Recognition (CVPR) conference, Kubernetes on Nvidia GPUs automates the deployment, maintenance, scheduling and operation of containers across clusters of nodes.

“With increasing number of AI powered applications and services and the broad availability of GPUs in public cloud, there is a need for open-source Kubernetes to be GPU-aware,” wrote Nvidia in the announcement post. “With Kubernetes on NVIDIA GPUs, software developers and DevOps engineers can build and deploy GPU-accelerated deep learning training or inference applications to heterogeneous GPU clusters at scale, seamlessly.”

The new offering will be tested, validated and maintained by Nvidia. The GPU maker said the software can orchestrate resources on heterogeneous GPU clusters and optimize their utilization with active health monitoring.
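
For readers wondering what GPU-aware scheduling looks like in practice, here is a minimal sketch in Python that builds a Pod manifest requesting GPUs. It assumes the `nvidia.com/gpu` resource name that upstream Kubernetes exposes through Nvidia's device plugin; whether Nvidia's release candidate uses exactly the same name is an assumption. The function `gpu_pod_manifest`, the pod name and the image `example.com/dl-training:latest` are hypothetical placeholders.

```python
import json


def gpu_pod_manifest(name: str, image: str, gpus: int = 1) -> dict:
    """Build a Pod manifest that asks the scheduler for `gpus` Nvidia GPUs."""
    if gpus < 1:
        raise ValueError("gpus must be at least 1")
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name},
        "spec": {
            "restartPolicy": "Never",
            "containers": [
                {
                    "name": name,
                    "image": image,  # hypothetical image, not from the announcement
                    # Assumed resource name, as used by the upstream device plugin.
                    "resources": {"limits": {"nvidia.com/gpu": gpus}},
                }
            ],
        },
    }


if __name__ == "__main__":
    manifest = gpu_pod_manifest("trainer", "example.com/dl-training:latest", gpus=2)
    print(json.dumps(manifest, indent=2))
```

Saved to a file, the printed JSON could be submitted with `kubectl apply -f`, and the scheduler would then place the pod only on a node that has two unallocated GPUs.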

Kubernetes on Nvidia GPUs is now freely available to developers for testing.

At CVPR 2018, Nvidia also announced TensorRT 4, the latest version of its TensorRT runtime engine, which adds new recurrent neural network layers for neural machine translation apps and new multilayer perceptron operations and optimizations for recommender systems. It has now been integrated with TensorFlow.

The company also made available two new libraries, Nvidia DALI and Nvidia nvJPEG, for data augmentation and image decoding.

“With DALI, deep learning researchers can scale training performance on image classification models such as ResNet-50 with MXNet, TensorFlow, and PyTorch across Amazon Web Services P3 8 GPU instances or DGX-1 systems with Volta GPUs,” wrote Nvidia.

Nvidia DALI is open source and now available on GitHub.